Whoa! It’s been a while since my last post. In that time, SimpliFit launched the SimpliFit Weight Loss Coach for Android and iOS, the SimpliFit Coaching program, and, just last week, Magical, a texting-based picture calorie tracker. Yes, we’ve been quite busy! And it’s given me a lot more content for future blog posts.

Today, though, I’ll build on my previous post, Validating iOS In-App Purchases With Laravel, by covering Android in-app purchases (IAPs) in Laravel. The process was completed for a Laravel 4 app, but the code I’ll be showing can be used in Laravel 5 as well.

The iOS and Android workflows are fairly similar, and working with Android IAPs is just as frustrating as working with iOS IAPs, for the same reason: lackluster documentation. If you combined the best parts of Apple’s and Google’s IAP systems, you would get quite a good system. As it stands, though, both systems make you wish your app didn’t have IAPs.

But if you’re reading this, then you probably have the unenviable task of adding IAP verification to your app. First, I’d recommend that you familiarize yourself with Google’s In-app Billing documentation and skim the Google Play Developer API. Also, I’m assuming that you’ve already created your app in the Google Play Developer Console and added the in-app products you’ll be offering.

On the front end, we are again using the Cordova Purchase Plugin to mediate between our app and Google Play via Google’s In-App Billing Service. Since the SimpliFit app only had a monthly subscription (we have since made the app free), I’ll be discussing how to work with in-app auto-renewing subscriptions. However, this guide can easily be applied to a one-time IAP.

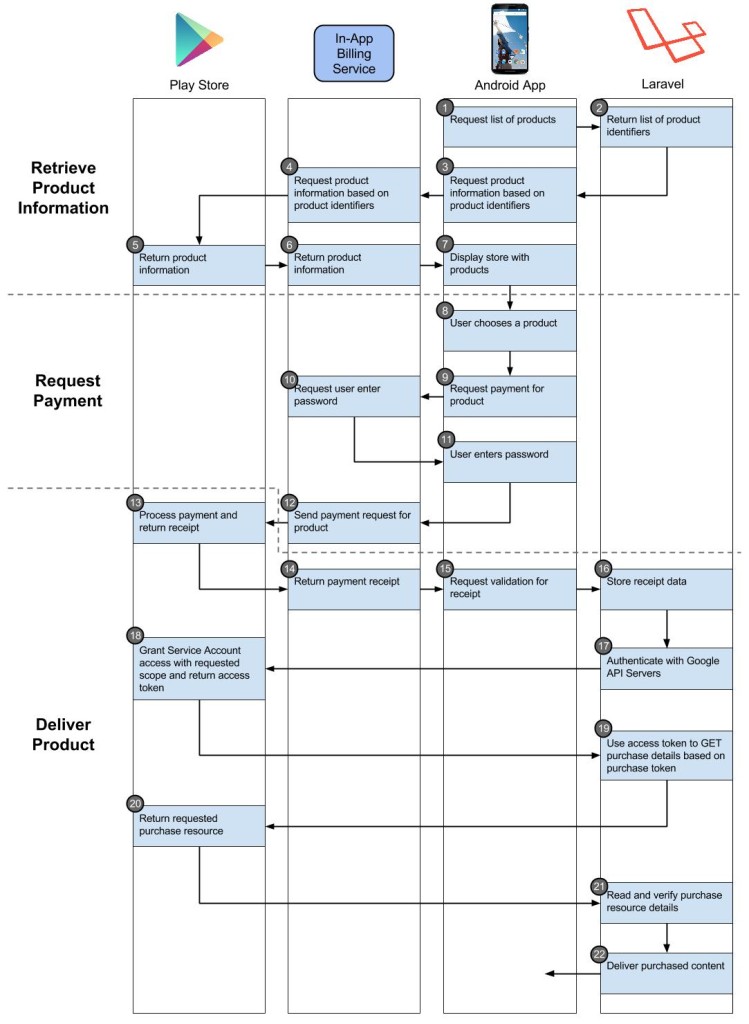

Android IAP Workflow

Just as with iOS, we have three IAP stages: 1) Retrieve product information, 2) Request payment, and 3) Deliver the product.

For iOS IAPs, Stage 3 was the most involved stage on the Laravel side, and for Android it takes even more steps. Take a look: